I’ve been playing with suffix trees recently, in particular trying to get my head around Ukkonen’s algorithm for suffix tree construction. I’ve got the hang of it now after trying out some code and checking it against this excellent video walk through of Ukkonen’s algorithm by Tushar Roy.

Tries, Radix Trees, Suffix Trees

A suffix tree stores every suffix of a string, or of several strings, and makes it easy to find prefixes of those suffixes, in linear O(m) time, where m is the length of the substring. So it lets you find substrings in O(m) time. So it can be used for full text search, and it’s often used for DNA comparison.

A suffix tree is actually a radix tree (also known as a radix trie), with suffixes inserted instead of just the whole string. A radix tree is itself a compacted trie (also known as a prefix tree).

A trie (pronounced try) is a tree with characters on the edges, letting you discover the whole string as you traverse the edges, staring with the edge from the root for the first character of the string. For instance, this lets you offer text completion if the user gives you the first few correct characters of a word.

A radix tree compacts the Trie by letting each edge have several characters, splitting the edge only when necessary to distinguish different strings. So, while a Trie containing “abc” would have a chain of root->a->b->c, a Radix Tree would have just root->abc. Adding “abd” would change that to root->ab->c, with another for root->ab->d.

A suffix tree, or radix tree, can save space by storing just the start and end indices of its strings instead of the strings themselves. Then each edge has constant size, and the space for the tree is linear O(m), where m is the length of one inserted string. Or maybe you could say its linear O(nk), for n strings of length k, where k is m considered effectively constant.

For the string “banana” a suffix tree would look like this, with both leaf nodes and intermediate end markers marked with *.

root

banana*

a*

na*

na*

na*

na*

We can add a second string in the same suffix tree, associating values with the leaf at the end of each path, letting us know which items have a particular substring. For instance, if I add “banana” (value 0) and “bandana” (value 1) to a suffix tree, I can ask about “ana” and discover that it identifies both items (value 0 and value 1), via the a->n->a path. The tree looks like this:

ban

ana (0)

dana (1)

a (0, 1)

n

a (0, 1)

na (0)

dana (1)

n

a (0, 1)

na (0)

dana (1)

dana (1)

Suffix Tree Construction

The hard part is constructing the suffix tree. When we insert one string of length m, we actually insert m suffixes. If each suffix insertion takes O(m) time, then the whole construction time is at least O(m^2).

Ukkonen’s algorithm reduces this to O(m) by iterating through the string just once from start to end and using the ideas of an implicit “global end”, the “active point” and “suffix links” to jump to wherever the rest of a smaller suffix should be inserted.

Each “phase” deals with one character from the string, in sequence, performing “extensions” within that “phase” to add suffixes that start with that character. This is useful because of the repeating sub-structure of suffix trees – each sub-tree can appear again as part of a smaller suffix.

Global End

Whenever the algorithm adds a node, that’s going to be a leaf node, meaning the edge to the leaf node will have the actual end of the inserted string. So the algorithm assigns a global end to the edge. We increment that end index each time we process the next character, so these edges get longer automatically.

Active point

The active point identifies a point on an edge in the tree by specifying a node, an edge from that node (by specifying a first character), and a length along that edge. Within every “extension”, the algorithm starts looking for the next character at the active point. Sometimes it will find the next character only because an edge got longer automatically when we incremented our global end.

Whenever it finds the next character from that active point, maybe even after stepping across an intermediate node, it sets that newly-found position as the active point. This is called Rule 3.

Whenever it doesn’t find the next character from that active point, it adds a node, splitting the edge if necessary. This is called Rule 2. Whenever it splits an edge and creates an internal node, it changes the active point to the same character in a smaller edge, and looks again for the same next character. This lets it do the same split in the smaller suffix. It keeps doing this until all the smaller suffixes have been split in the same way.

This example, with the string “banana”, shows the use of the global end and of Rule 3 and Rule 2. It doesn’t show the use of suffix links yet, because it always splits edges from the root. Note that Ukkonen’s algorithm typically appends a special out-of-alphabet “$” character to the string so it can insert leaf nodes.

b: Look from root. Add to root.

b

a: Look from root. Add to root.

b

ba (because we incremented the global end).

n: Look from root. Add to root.

ban (because we incremented the global end).

an (because we incremented the global end).

n

a: Look from root. Find it (“ana”). Set it as the active point.

bana (because we incremented the global end).

ana (because we incremented the global end).

na

n: Look from previously-found “a”, in “_a_na”. Find it. Set it as the active point.

banan (because we incremented the global end).

anan (because we incremented the global end).

nan

a: Look from previously-found “n” in “a_n_an”. Find it. Set it as the active point.

banana (because we incremented the global end).

anana (because we incremented the global end).

nana

$: Look from previously-found “a” in “an_a_na”. Not found, so add an internal node and add the $. Change the active point to same character in a smaller (suffix) edge: “a” in “n_ana”.

banana$ (because we incremented the global end).

ana

na$ (because we incremented the global end).

$

nana$

$: Look from “a” in “n_a_na$”. Not found, so add an internal node and the $.

Change the active point to same character in a smaller (suffix) edge: “a” in “_a_na$”.

banana$

ana

na$

$

na

na$

$

$: Look from “a” in “_a_na$”. Not found, so add an internal node and the $. Change the active point to root.

banana$

a

na

na$

$

$

na

na$

$

$: Look from root. Add to root.

banana$

a

na

na$

$

$

na

na$

$

$

Suffix Links

In Rule 2, when the algorithm splits an edge, adding an internal node, if sets a suffix link for that internal node, pointing to root. If it has previously set a suffix link for any other internal node, while looking for the same character (in the same “phase”), it updates the link for that previously-created internal node to point to the new node.

If the active node is not root after adding the internal node, it also changes the active node via the active node’s suffix link, taking us to a previously-created internal node. As before, this lets us jump to the smaller suffix of the same substring, to make the same split there, but suffix links let us do it even when we are not on an edge from root.

We can see this when using “banbadbans” as an example. We reach this point:

a: Look from “n” in “ba->_n_badban$”. Not found, so add an internal node and add the $. Follow the suffix link from the “n” to the “n” in “a->_n_badban$”.

ba

n

badban$

$

dban$

a

nbadban$

dban$

nbadban$

dban$

a: Look from “n” in “a->_n_badban$”. Not found, so add an internal node and add the $. Follow the suffix link from the “n” to the “n” in “_n_badban$”.

ba

n

badban$

$

dban$

a

n

badban$

$

dban$

nbadban$

dban$

a: Look from “n” in “_n_badban$”. Not found, so add an internal node and add the $. Follow the suffix link from the “n” to root.

ba

n

badban$

$

dban$

a

n

badban$

$

dban$

n

badban$

$

dban$

a: Look from root. Not found. Add to root.

ba

n

badban$

$

dban$

a

n

badban$

$

dban$

n

badban$

$

dban$

$

Example Code

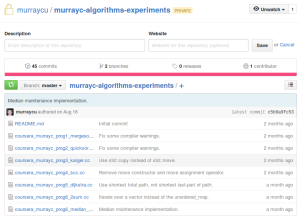

Here is some example C++ code. It’s also in github, with far more comments.

const auto key_start = key.start_;

const auto key_end = str_end(key);

ActivePoint active;

active.node = &root_;

active.edge_valid = false; // Instead of -1.

active.length = 0;

std::size_t remaining = 0;

auto end_ptr = std::make_shared<KeyIterator>(key_start);

KeyIterator& end = *end_ptr;

// The "phases"

for (auto i = key_start; i != key_end; ++i) {

++remaining;

++end;

Node* prev_created_internal_node = nullptr;

// The "extensions".

while(remaining) {

const auto edge_match = (active.edge_valid && active.length) ?

find_partial_edge(active, i) :

find_partial_edge(active.node, i);

const auto edge = edge_match.edge_;

const auto part_len_used = edge_match.edge_part_used_;

if (!edge_match.char_found_) {

KeyInternal prefix(i, end_ptr);

// Rule 2 extension: There is no match:

if (part_len_used == 0) {

active.node->append_node(prefix, value);

} else {

auto extra_node = edge->split(part_len_used);

extra_node->append_node(prefix, value);

extra_node->suffix_link_ = &root_;

if (prev_created_internal_node) {

prev_created_internal_node->suffix_link_ = extra_node;

}

prev_created_internal_node = extra_node;

if (active.node != &root_) {

active.node = active.node->suffix_link_;

} else {

--active.length;

++active.edge;

}

}

--remaining;

continue;

}

// Rule 3 extension:

active.node = edge_match.parent_node_;

active.edge = edge->part_.start_;

active.edge_valid = true;

active.length = part_len_used;

break;

}

}

Generic Suffix Tree in C++

However, the basic Ukkonen’s algorithm just stores one string. Before trying Ukkonen’s algorithm, I wrote a templated C++ suffix tree that can contain multiple strings (see the test code), with a simple O(m^2), or possibly O(m^3) construction time.

I created a version that uses Ukkonen’s algorithm, reducing construction to O(m) time, but it doesn’t support multiple inserts. In this implementation, I’ve avoided the need for extra “$” leaf nodes. I’ve also stored the suffix links in a hash table, to avoid wasting space per node even after construction, though that replaces a true constant-time read/write with an amortized constant time hash table insert/lookup.

I’m trying to adapt the Ukkonen-based suffix tree to support multiple inserts. Usually, to create such a “generalized” suffix tree with Ukkonen’s algorithm, you concatenate the strings together, separated by unique out-of-alphabet characters (“$”, “#”, etc), insert the whole thing, then prune the paths you don’t need, but I’d like to avoid that.

I’m not suggesting that anyone use this code for serious work. For now, it’s littered with asserts() and debug output and it’s in need of optimization because it’s generalized for any standard C++ container, such as std::string. For instance, it doesn’t seem wise to store std::string::iterators, instead of pointers, for the start/end ranges on edges. I’m also bothered by the use of std::shared_pointer<>s to share the “global end”, which still take space per edge even after construction.

Alternative Algorithms

Update: I’ve just watched Erik Demaine’s 2012 MIT “Strings” lecture about suffix trees (I needed to watch his previous “Static Strings” lecture first). He doesn’t mention Ukkonen’s algorithm, but does mention a “DC3 (Difference Cover 3)” algorithm by Kärkkäinen-Sanders-Burkhardt (at least the lecture’s student notes call it that) for building suffix arrays in linear time. He shows that a suffix tree can then be constructed from a suffix array by using the LCP (Longest Common Prefix) of the suffix array to build a cartesian tree, again in linear time. So the whole suffix array construction would be linear time. Suffix arrays themselves anyway offer better data locality than suffix trees and this DC3 algorithm apparently allows parallelization. I hope I find time to explore all that sometime in code.

He also mentions the use of a “suffix tray” to store the edges in each node, to get O(P + lg(Σ))) query time, much the same as the O(P) time you’d get with an array, while reducing the overall space complexity to O(T) instead of O(TΣ ). Here P is the size of the query string, T is the size of the text being searched, and Σ is the size of the alphabet. He also mentioned instead using a combination of hash tables and van Emde Boas trees to get O(P + lglg(Σ)) query time. Those parts of the lecture felt quite rushed to me, so I’d like to investigate that sometime too if I find time.